Kaplan Integrated Testing Platform

Turning a critical pain point into an adoption success story

Why this mattered

➝ Transformed a high-friction, manual process into a trusted self-service capability for nursing school administrators

➝ Improved adoption and engagement by grounding redesign decisions in research and real user needs

➝ Reduced operational burden on support teams while increasing platform value for institutional customers

───────

Impact summary

✅ 84% adoption in first 3 months

📞 25% fewer support calls

📊 43% increase in session time

👩‍⚕️ 27 nursing faculty in research program

⭐ 100% would recommend the redesigned experience

───────

Executive summary

‣ Built evidence-based case by synthesizing customer interviews, service data, and prototype testing to reprioritize the product roadmap

‣ Facilitated cross-functional design sprint bringing together SMEs, developers, and frontline service team members to solve the problem collaboratively

‣ Led comprehensive testing program with 27 faculty across 3 weeks, iterating continuously between sessions

‣ Validated design decisions in real time through moderated sessions, refining terminology, context cues, and privacy approaches based on observed behavior and stated needs

Supporting detail

The challenge

Nursing school instructors needed to schedule secure end-of-semester benchmark tests for their students, but the existing self-service tool was so unusable that fewer than 10% of faculty used it. Instead, they called or emailed service specialists, a time-consuming, error-prone process that frustrated customers and drove up support costs.

Our product roadmap didn’t initially prioritize fixing this. However, customer research revealed test scheduling was a bigger problem than we’d realized, affecting time, effort, engagement, and cost across the board.

My role

I led the effort to pivot our MVP strategy and redesign the entire test scheduling experience from the ground up. This included conducting and synthesizing user research, facilitating cross-functional alignment, and overseeing design and testing through launch.

The approach

Shifting priorities based on evidence

Through customer interviews and internal research, I built a case that test scheduling wasn’t just a usability issue; it was actively damaging the customer relationship and increasing operational costs. I synthesized feedback from three sources:

‣ Direct customer conversations (remote & in-person interviews with faculty)

‣ Internal service data showing volume and nature of support requests

‣ Prototype testing that revealed strong demand for self-service functionality

I presented this evidence to product and business leadership and made the case for reprioritizing our roadmap. The team agreed to make test scheduling the anchor of our MVP launch.

Facilitating cross-functional problem-solving

Rather than designing in isolation, I organized and ran a 3-day design sprint with a cross-functional team: subject matter experts, developers, QA analysts, curriculum specialists, and, critically, experienced service team members who dealt with scheduling issues daily.

I facilitated the team through exercises designed to build shared understanding of the problem and its root causes, then guided structured ideation that moved from individual sketching to small-group brainstorming to concrete storyboards. By the end, we had clear direction and tangible artifacts to move into prototyping and testing.

Testing and iterating

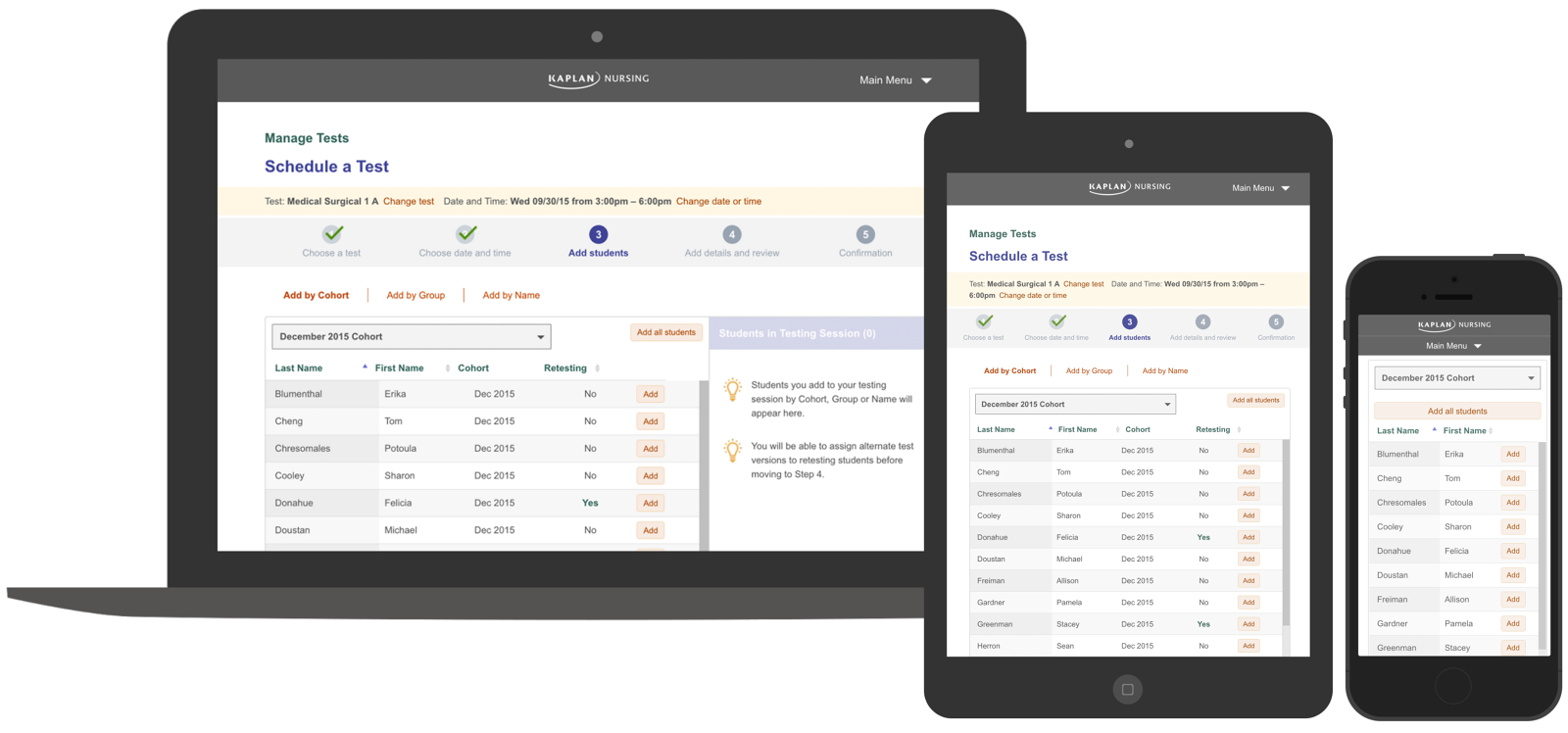

I led a comprehensive user testing program with 27 nursing school faculty across the U.S. over three weeks (24 remote sessions and 3 in-person). I conducted 45- to 60-minute moderated sessions using high-fidelity prototypes that evolved from clickable to coded as testing progressed.

Iteration was continuous. I made refinements between sessions based on what I was learning, moving quickly from identifying an issue to testing a solution with the next participant. This allowed me to validate changes in real time rather than waiting until all testing was complete.

Key findings that shaped the final design:

Terminology is extremely important. Faculty were confused by terms such as “repeat” versus “retesting” students. I iterated through 4 different versions of this language during testing before landing on “retesting,” which participants consistently understood.

Context is equally critical. The concept of a “testing session” was initially unclear. I revised the helper text and interface cues multiple times until faculty could articulate what it meant and how to use it effectively.

Privacy concerns can shape design direction. Early prototypes flagged students with testing accommodations (ADA), but faculty raised concerns about bias and HIPAA violations. I redesigned the approach to address accommodations without explicitly labeling students.

Demand validation happened organically. When I explained the concept of “groups” (not yet built in the prototype), faculty immediately saw value and described specific use cases, validating that this feature should be prioritized for future development.

The testing confirmed our hypothesis: faculty wanted self-service scheduling and would use an intuitive tool over calling support. The validation came from both stated preference and observed behavior: high completion rates, unprompted positive feedback, and faculty describing exactly when and how they would use it.

The outcome

Within three months of launch:

‣ 84% adoption rate among faculty (up from less than 10%)

‣ 25% reduction in support calls related to test scheduling

‣ 100% recommendation rate for the new experience

‣ Transformed a major pain point into a value driver for customer retention

What I learned

This project reinforced the importance of staying close to customer pain points and being willing to challenge roadmap assumptions when evidence points in a different direction. It also demonstrated the value of bringing the right people into the room early, as insights from our customer service team were critical to understanding the full scope of the problem.